Project Description

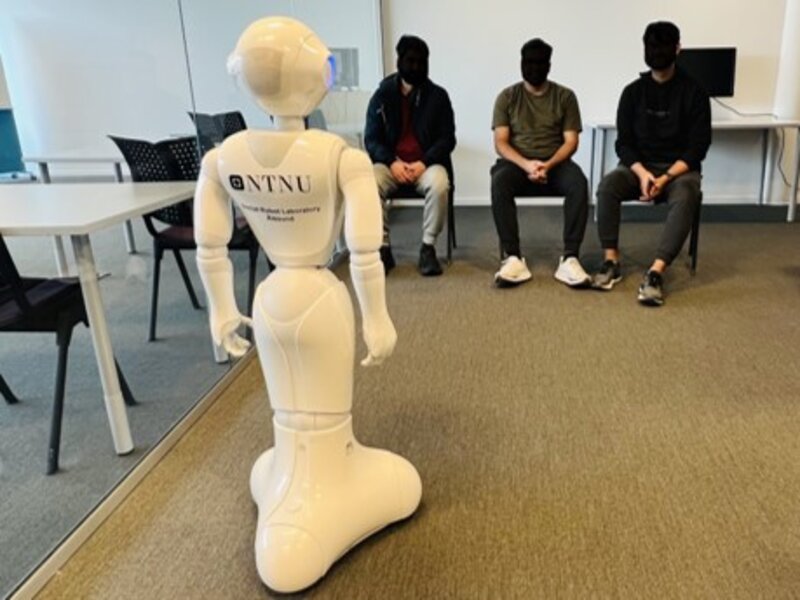

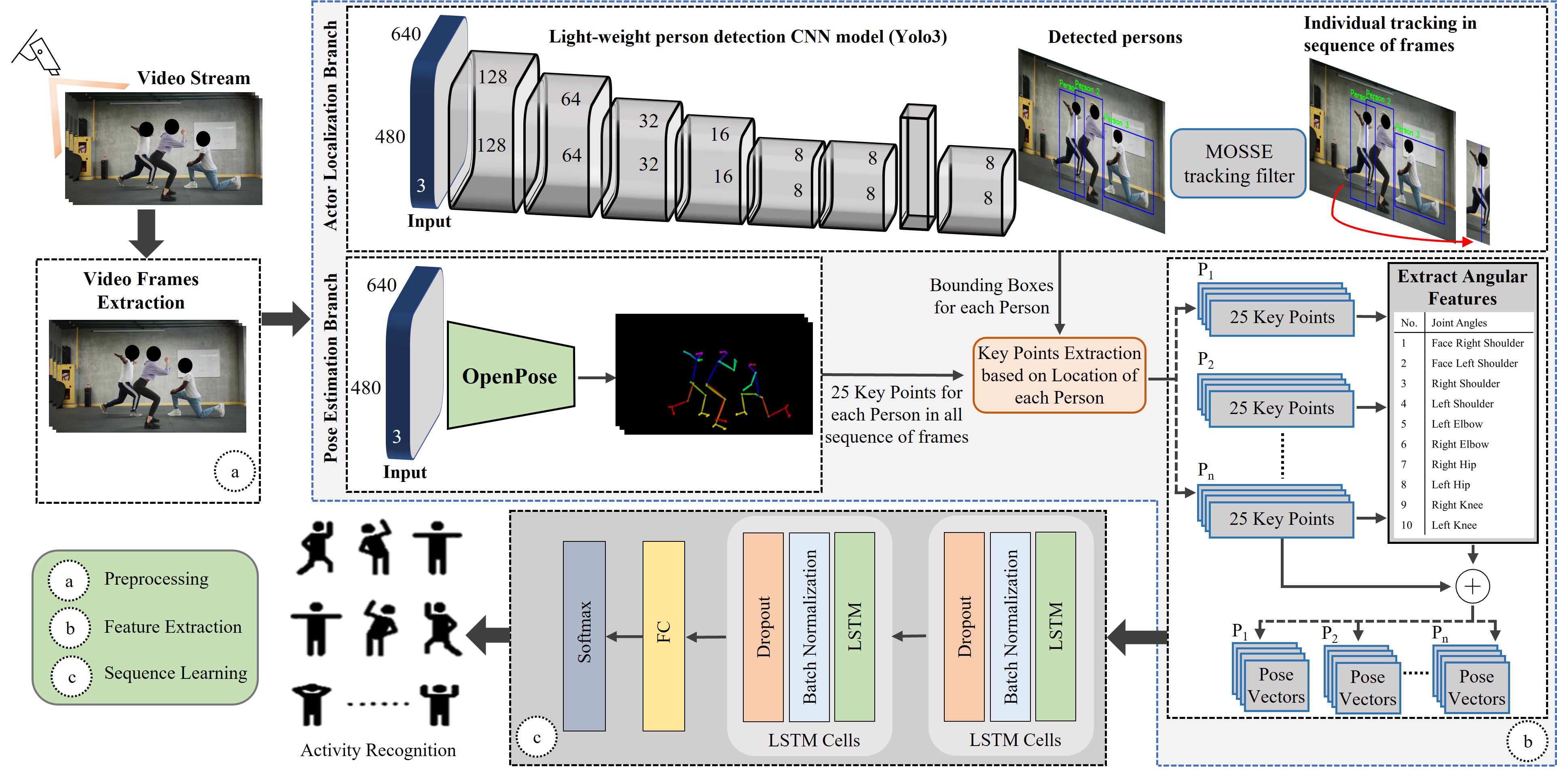

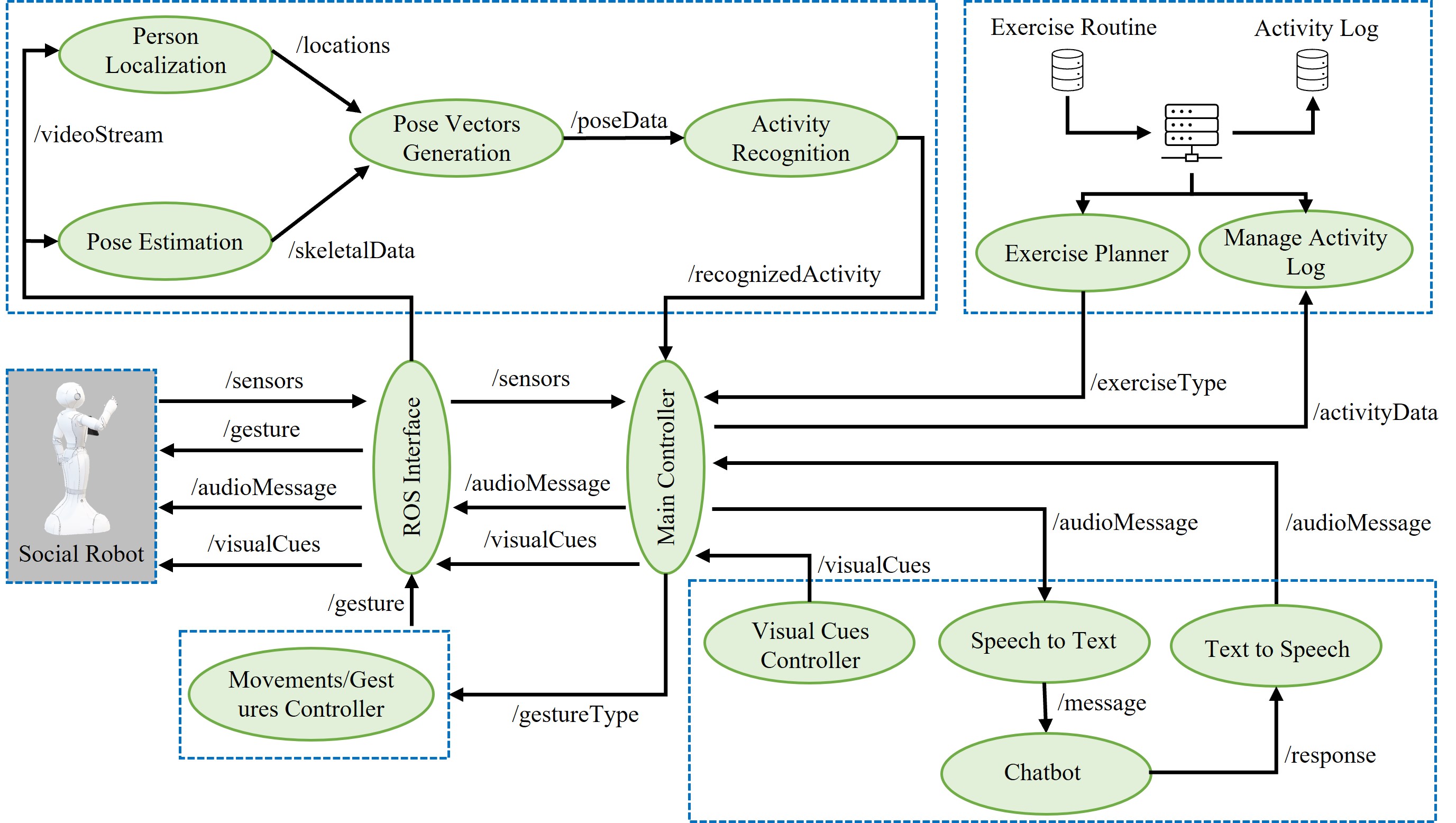

Designed and developed an efficient, lightweight multi-person activity recognition framework for robot-assisted healthcare applications. First, recruited participants and collected video data of physical rehabilitation activities to train deep learning models. Next, developed an LSTM-based deep learning framework for activity recognition. Then built a ROS-based application to deploy the framework on a social robot named ‘Pepper’. Finally, conducted a user study to understand the user experience with the resulting social robot application.
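As a rough illustration of how video data can be prepared for an LSTM, the sketch below splits per-frame feature vectors into fixed-length, overlapping windows. It is not taken from the project itself: the function name `make_windows`, the window length of 30 frames, the stride of 15, and the 34 features per frame are all assumptions made for the example.

```python
import numpy as np


def make_windows(features, window=30, stride=15):
    """Split a (num_frames, num_features) array into overlapping windows.

    Returns an array of shape (num_windows, window, num_features), which is
    the (samples, timesteps, features) layout that Keras LSTM layers expect.
    The default window and stride are illustrative assumptions.
    """
    features = np.asarray(features, dtype=np.float32)
    if features.ndim != 2:
        raise ValueError("features must be 2-D: (num_frames, num_features)")

    num_frames, num_features = features.shape
    if num_frames < window:
        # Clip is too short to yield a single full window.
        return np.empty((0, window, num_features), dtype=np.float32)

    # Start index of every full window that fits inside the clip.
    starts = range(0, num_frames - window + 1, stride)
    return np.stack([features[s:s + window] for s in starts])


if __name__ == "__main__":
    # Hypothetical clip: 100 frames with 34 features per frame.
    frames = np.random.rand(100, 34)
    batch = make_windows(frames)
    print(batch.shape)  # (5, 30, 34)
```

Overlapping windows give the model more training sequences from the same recording, at the cost of correlated samples; a suitable window length depends on how long each rehabilitation movement lasts.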

Skills: Python, Keras, LSTM, OpenCV, NumPy, ROS, User research

Link to Paper